딥러닝 기반 BeautyGAN 분석을 통한 메이크업 이미지 최적화 추천시스템 적용

Application of Makeup Image Optimization Recommendation System through the Analysis of BeautyGAN Based on Deep Learning


Abstract

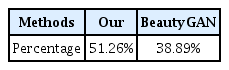

The purpose of this study was to identify users' makeup preferences and to suggest a method for optimizing makeup style through BeautyGAN analysis, using the preferred image for each age group. Through this, customized makeup styles suited to each user can be proposed, and beneficial services can be provided to the makeup industry and consumers. In addition, by developing and validating new methods that effectively combine deep learning and vision systems, we aim to innovate makeup-related image conversion technology and contribute to academic and practical advances in this field. For this purpose, reference images were collected for each age group to implement image optimization, and the researcher's own images served as input data. Images with low resolution or poor lighting conditions were removed, after which the faces were aligned and resized. In the performance evaluation of the BeautyGAN model, the existing image scored 51.26%, which was judged to be close to the 38.89% of the BeautyGAN image. From an academic point of view, these results suggest that customized makeup style suggestions or adjusted makeup effects reflecting the user's preferences can be provided. From a practical point of view, suggesting makeup styles that suit customers' characteristics more accurately and quickly should improve the quality of customized beauty services.

Ⅰ. Introduction

The core technologies of the Fourth Industrial Revolution, including artificial intelligence (AI), big data, the Internet of Things (IoT), augmented and virtual reality (AR, VR), and non-fungible tokens (NFTs), are accelerating digital transformation and triggering paradigm shifts in various areas of society. In line with these technological changes, innovation is also taking place in education, culture, and art. Artificial intelligence in particular is growing rapidly owing to government support, the entry of large companies, the emergence of related companies, and increasing demand from industry (Kim & Kim, 2022).

Generative Adversarial Networks (GANs) are among the artificial intelligence technologies that have shown remarkable results in recent years. A GAN is made up of two neural networks that learn adversarially: a generator that produces fake data resembling real data, and a discriminator that distinguishes the generator's fake data from real data. In this way, a GAN can learn the distribution of real data and generate new data. GANs are used in various fields such as image, speech, and text, and much of the research concerns image generation and conversion. GANs can be used to create various types of images, such as human faces, landscapes, and artworks.
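The adversarial learning described above is commonly written as a minimax objective. The form below is the standard formulation from the original GAN literature, given for orientation only and not as the specific objective used in this study. Here $G$ is the generator, $D$ is the discriminator, $p_{\text{data}}$ is the real data distribution, and $p_z$ is the noise prior.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator maximizes $V$ by assigning high scores to real samples and low scores to generated ones, while the generator minimizes it by producing samples that the discriminator scores as real.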

Recently, various design tools based on deep learning technologies such as GANs have been developed, and artificial intelligence is expanding into creative fields that were long considered the exclusive domain of humans (Kim et al., 2017; Rostamzadeh et al., 2018). These technical advantages of GANs are also being used in the ever-evolving beauty industry. The beauty industry provides a variety of services related to a person's appearance, such as hair, makeup, nails, skin, and cosmetics, to make the human body healthy and beautiful. Makeup in particular is the most efficient way to improve one's appearance, create an image, and express personality. However, applying makeup requires choosing cosmetics that suit the face shape and skin color, controlling the shade and amount, and learning various techniques. Without the help of a professional this process takes a lot of time and money, and it is difficult to find a suitable makeup style. Consumers therefore prefer customized services that can meet their personalized beauty needs. BeautyGAN responds to these needs by applying makeup to the face in a photo through makeup transfer based on a generative adversarial network (Li et al., 2018; Lim, 2022).

Among the studies related to BeautyGAN, Lim (2022) studied a makeup transfer method using a facial segmentation loss function based on BeautyGAN, and Hwang et al. (2023) applied image-based artificial intelligence technology (object detection, object segmentation, and BeautyGAN) to generate data-based quantitative criteria and proposed a cosmetics recommendation web service suited to personal color. Tong et al. (2007) studied the automatic transfer of makeup styles to new facial images, and Xu et al. (2013) proposed an automatic makeup framework that applies makeup and skin improvements to the face. Li et al. (2018) proposed the use of deep learning and GANs for instance-level facial makeup transfer, while Hong & Siyu (2022) proposed MakeupGAN, a new framework for makeup editing. Building on this previous research, this study identifies users' makeup preferences through BeautyGAN analysis using the preferred images for each age group, and suggests a method to optimize the makeup style of BeautyGAN on that basis. Through this, customized makeup styles suited to each user can be proposed, and beneficial services can be provided to the makeup industry and consumers. In addition, by developing and validating new methods that effectively combine deep learning and vision systems, we aim to innovate makeup-related image conversion technology and contribute to academic and practical advances in this field.

Ⅱ. Theoretical Background

1. Latest technology trends in image generation and transformation using GANs

Generative Adversarial Networks (GANs) are a technology in which artificial neural networks take random inputs and generate new images of a desired category, or convert existing images into different forms or styles. The field has received a lot of attention and has been actively researched in recent years. GANs generate realistic image data by training a generative network and a discriminative network in competition with each other, and they are also applied to real-world services in design fields that rely heavily on image editing (Goodfellow et al., 2020; Jo et al., 2020). Since GANs were first proposed in 2014, much research has applied them to various fields. In addition to image creation, GANs can be used for tasks including image editing, conversion, restoration, compositing, recognition, search, compression, and security. GANs can also be applied to other forms of data, such as voice, text, video, music, graphics, games, animations, 3D models, and code.

GANs have many advantages, but several problems remain. Their training is unstable, their performance is difficult to evaluate, and they require a lot of memory and computation. In addition, producing high-resolution images is difficult, and training takes a long time. To address these problems, various modifications and improvements have been proposed, including WGAN, EBGAN, BEGAN, CycleGAN, StarGAN, StyleGAN, SAGAN, SRGAN, Pix2Pix, and Pix2PixHD. WGAN (Wasserstein GAN) addresses the training instability of GANs by using the Wasserstein distance between the real and generated data distributions as the training objective (Arjovsky et al., 2017). Energy-based GAN (EBGAN) defines the discriminator as an energy function, and the generator learns to produce samples that minimize that energy; BEGAN (Boundary Equilibrium GAN) builds on EBGAN and adaptively adjusts the objective function to keep the generator and the discriminator in balance (Wei et al., 2017; Berthelot et al., 2017). CycleGAN enables style transformation between unpaired images (Zhu et al., 2020), and StarGAN learns image translation across multiple domains with a single model, so that attributes such as hairstyle, gender, and age can be changed (Choi et al., 2018). StyleGAN can produce high-resolution images of faces (Karras et al., 2019), and Super-Resolution GAN (SRGAN) restores low-resolution images to high resolution by combining an adversarial loss with a content loss, which improves image quality and clarity (Ledig et al., 2017). Pix2Pix learns transformations between paired images, and Pix2PixHD extends Pix2Pix to high-resolution images, preserving detail and consistency by using multi-scale generators and discriminators (Isola et al., 2017; Wang et al., 2018). GANs are thus an innovative artificial intelligence technology that improves the quality and variety of images, enhances user creativity and convenience, and has considerable potential for image generation and transformation.
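Because makeup and non-makeup photographs are typically unpaired, the cycle-consistency idea behind CycleGAN is the most relevant of the variants listed above. As a hedged illustration of the general technique (not a formula taken from this study), the cycle-consistency loss can be written with two mappings $G: X \rightarrow Y$ and $F: Y \rightarrow X$:

$$
\mathcal{L}_{\text{cyc}}(G, F) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\lVert F(G(x)) - x \rVert_1\big] + \mathbb{E}_{y \sim p_{\text{data}}(y)}\big[\lVert G(F(y)) - y \rVert_1\big]
$$

The loss penalizes a translation that cannot be mapped back to its input, which encourages the content of the original image to be preserved even though no paired examples are available.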

2. Deep learning-based makeup image recommendation system

With the recent development of information and communication technology (ICT), services using mobile devices have become more diverse, and an era of personalization has arrived in which appropriate information is provided effectively to each user (Perera et al., 2013; Li et al., 2015; Byun, 2017; Lee, 2017). In particular, as new technologies based on artificial intelligence develop, the makeup process is also changing in innovative ways.

The deep learning-based makeup image recommendation system receives the user's personally preferred image as input and recommends a suitable makeup style. It differs from existing makeup recommendation systems in the following ways. Makeup effects are applied to faces in photos using GANs, and image pre- and post-processing techniques are developed to address problems such as noise, distortion, and color change, improving the quality and variety of makeup images. In addition, because the makeup style is recommended on the basis of an image that the user actually prefers, user satisfaction is high and the recommendation reflects the user's own taste and characteristics, thus meeting personalized beauty needs. Not only makeup styles but also hairstyles, fashion, and accessories can be converted and recommended, providing more diverse and abundant beauty services.

Beauty applications that use deep learning to perform tasks such as face recognition, skin analysis, and makeup style extraction, and that recommend optimized makeup images based on users' preferences, characteristics, and feedback, include BeautyPlus, YouCam Makeup, SNOW, and B612. These applications use artificial intelligence, augmented reality, and GAN algorithms to let users virtually test makeup on their own faces. When a selfie is taken or edited, they provide a variety of filters, corrections, and makeup effects, and suggest suitable makeup colors or patterns by analyzing skin tone, face shape, eye color, hair color, and similar features. In addition, makeup products such as lipstick, eye shadow, and blush can be changed or adjusted, and users can choose the products they want and adjust the color, brightness, and transparency to create a makeup style. In the case of Perfect Corporation, a leading virtual try-on technology company in the United States, the technology is applied not only to makeup but also to various other fields (Ban, 2022). These technologies play an important role in accelerating the digital transformation of the beauty industry, and they have gained more attention as the importance of non-face-to-face consumer experiences was highlighted during the pandemic. They help consumers try products safely and make purchasing decisions, and they provide beauty brands with new marketing strategies.

Ⅲ. Methodology

1. Research

GANs are basically a technology that uses two opposing neural networks to generate new data. In this study, a set of face images with makeup and a set without makeup are used. The set of non-makeup faces is denoted X and the set of makeup faces is denoted Y, and each image belongs to either X or Y (Zhen et al., 2017). In other words, the study deals with the transfer and transformation of styles between makeup and non-makeup faces. The identity of the face must be maintained in this process, and the study explores how GAN technology can add makeup to or remove makeup from a face image.

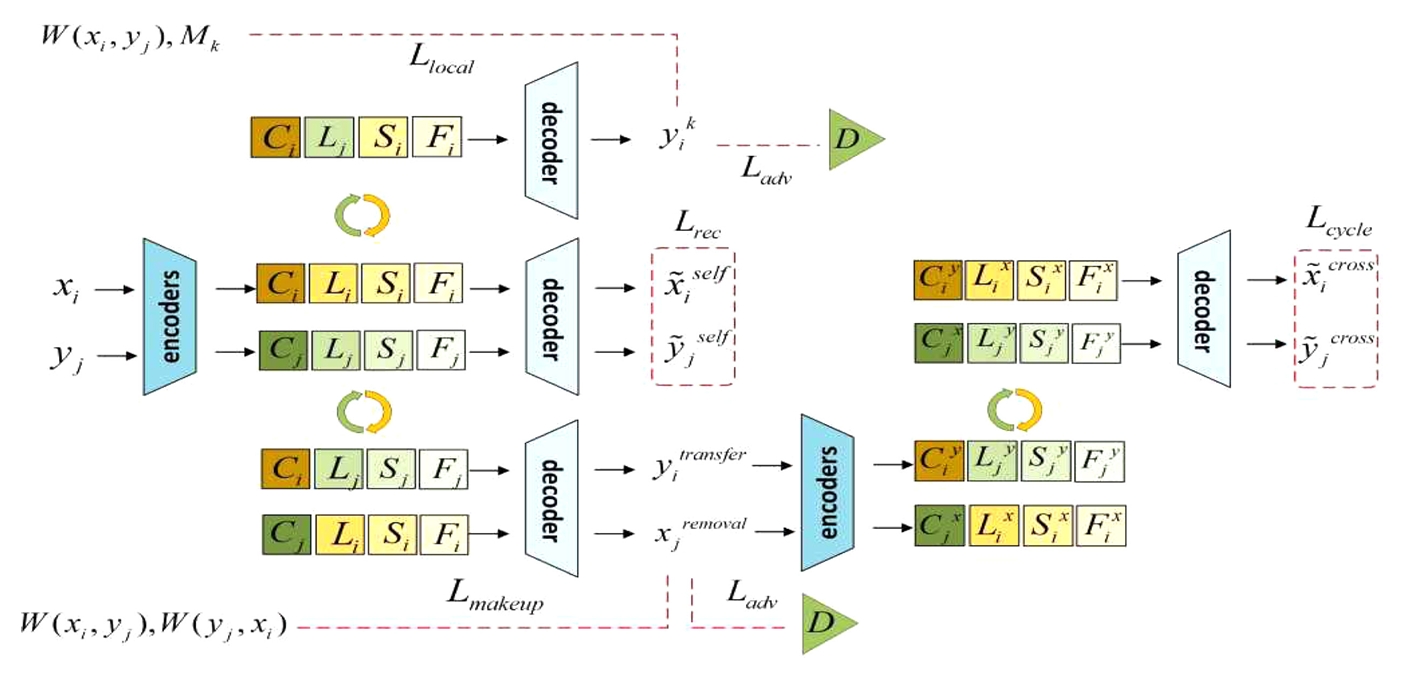

First, as shown in the following <Fig. 2> the variables are entered directly into the decoder to derive

Architecture of our whole network

(Source: Sun, Z., Liu, F., Liu, W., Xiong, S., & Liu, W. (2020). Local facial makeup transfer via disentangled representation. Proceedings of the Asian Conference on Computer Vision, pp. 459-473. DOI: 10.1007/978-3-030-69538-5_28)

2. Makeup transfer and removal

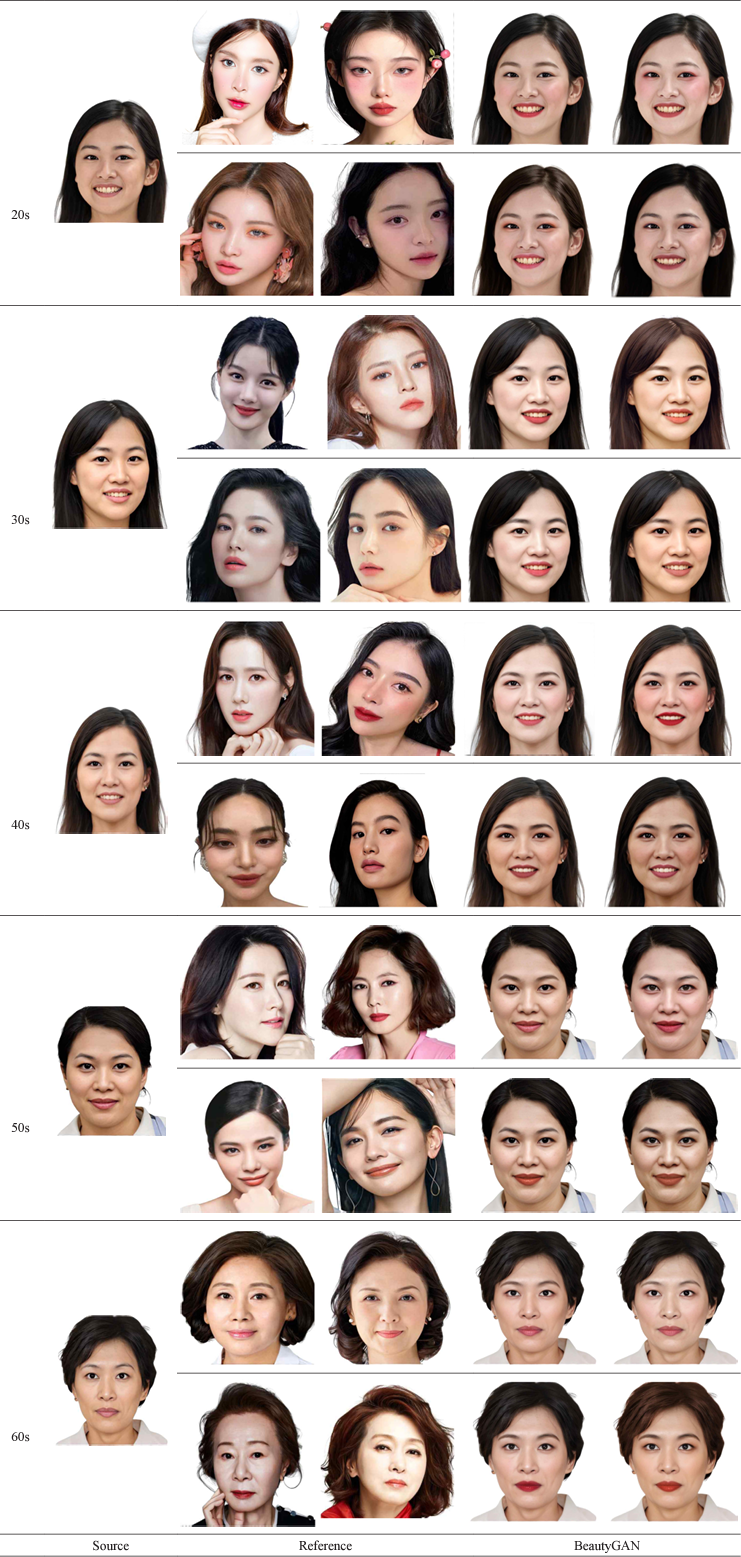

The encoder system consists of several parts: encoders for the 20s {ET}, 30s {EH}, 40s {EO}, 50s {EF}, and 60s {ES} age groups, and a decoder {G}. First, the makeup image style for each age group is extracted from the makeup and non-makeup images. This is entered into the decoder G to generate
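The routing described above, in which one style encoder per age group feeds a shared decoder, can be sketched as follows. This is a minimal toy illustration and not the study's network: `ToyStyleEncoder`, `decoder_g`, `transfer`, and the fixed blend strength are hypothetical stand-ins, and real encoders and decoders would be trained convolutional networks.

```python
from typing import Dict, List

Image = List[List[float]]   # toy grayscale image, pixel values in [0, 1]
StyleCode = List[float]     # toy latent makeup style vector


class ToyStyleEncoder:
    """Hypothetical stand-in for a trained age-group style encoder."""

    def __init__(self, symbol: str) -> None:
        self.symbol = symbol  # symbol used in the text, e.g. "E_T"

    def __call__(self, img: Image) -> StyleCode:
        # Summarize the reference image by its mean intensity.
        pixels = [p for row in img for p in row]
        return [sum(pixels) / len(pixels)]


# One encoder per age group, keyed by the groups named in the text.
ENCODERS: Dict[str, ToyStyleEncoder] = {
    "20s": ToyStyleEncoder("E_T"),
    "30s": ToyStyleEncoder("E_H"),
    "40s": ToyStyleEncoder("E_O"),
    "50s": ToyStyleEncoder("E_F"),
    "60s": ToyStyleEncoder("E_S"),
}


def decoder_g(non_makeup: Image, style: StyleCode, strength: float = 0.5) -> Image:
    """Stand-in for decoder G: blend each pixel toward the style value."""
    s = style[0]
    return [[(1.0 - strength) * p + strength * s for p in row] for row in non_makeup]


def transfer(non_makeup: Image, reference: Image, age_group: str) -> Image:
    """Encode the reference with the age-group encoder, then decode."""
    style = ENCODERS[age_group](reference)  # raises KeyError for unknown groups
    return decoder_g(non_makeup, style)
```

For example, `transfer([[0.2, 0.4]], [[0.6, 0.6]], "20s")` blends the two input pixels halfway toward the reference's mean intensity. Keeping the encoders in a dictionary makes the age group an explicit input rather than something the decoder has to infer.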

3. Local makeup transfer

Implementing the age-specific image optimization proposed in this study requires two things. First, the generated result must have the same makeup style as the reference makeup image in the specified semantic region. Second, the other semantic regions of the generated result must be identical to the image without makeup. A loss function is used to enforce both conditions. The local makeup loss function divides the image according to its semantic regions and induces the separation of latent variables, instead of feeding each region into its corresponding encoder. Face parsing is used only during network training to calculate the loss functions, not at test time.
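The two requirements above can be sketched as a masked loss with two terms. This is a simplified illustration under stated assumptions: the function names and the weights `w_region` and `w_rest` are hypothetical, the images are assumed to be pixel-aligned toy grids, and a plain L1 distance replaces whatever distance the study actually uses.

```python
from typing import List

Image = List[List[float]]
Mask = List[List[bool]]   # True where the pixel belongs to the target region


def masked_l1(a: Image, b: Image, mask: Mask) -> float:
    """Mean absolute difference over the pixels selected by the mask."""
    total, count = 0.0, 0
    for row_a, row_b, row_m in zip(a, b, mask):
        for pa, pb, m in zip(row_a, row_b, row_m):
            if m:
                total += abs(pa - pb)
                count += 1
    return total / count if count else 0.0


def local_makeup_loss(generated: Image, reference: Image, source: Image,
                      region_mask: Mask,
                      w_region: float = 1.0, w_rest: float = 1.0) -> float:
    """Match the reference inside the region and the source outside it."""
    rest_mask = [[not m for m in row] for row in region_mask]
    region_term = masked_l1(generated, reference, region_mask)
    rest_term = masked_l1(generated, source, rest_mask)
    return w_region * region_term + w_rest * rest_term
```

The loss is zero only when the generated image copies the reference makeup inside the masked region and leaves every other pixel equal to the non-makeup source. The mask plays the role of the face parsing result, which is why parsing is needed for training but not for inference.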

Ⅳ. Results and Discussion

1. Data collection

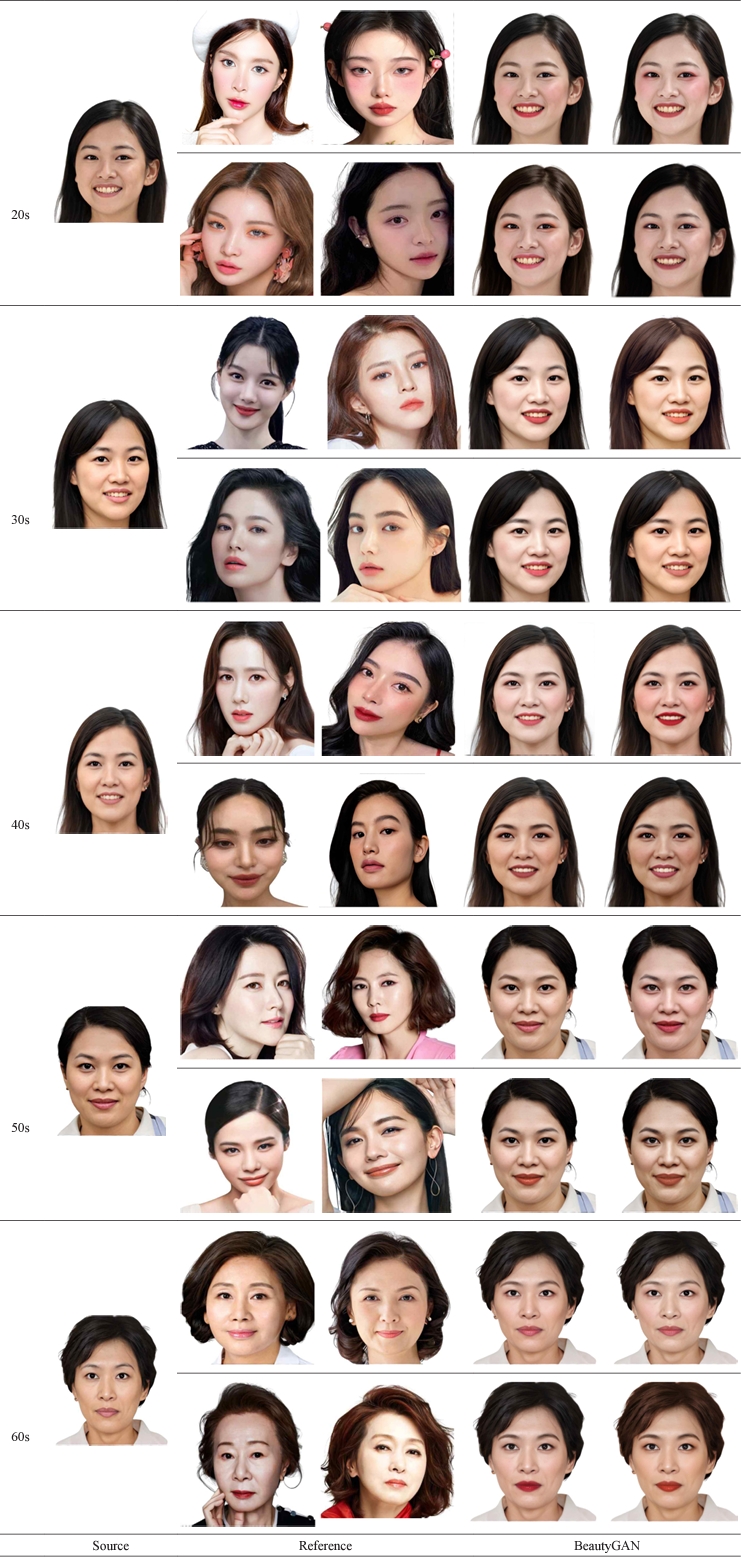

For data collection, reference images were gathered for each age group (20s, 30s, 40s, 50s, and 60s) to implement image optimization. The researcher's own images served as input data, and the reference data were collected from the website (https://www.pinterest.co.kr/). Images with low resolution or poor lighting conditions were removed from the collected photos, after which the faces were aligned and resized.

In the experiment, each image is scaled to 286×286, randomly cropped to 256×256, and flipped horizontally with a probability of 50% to augment the data. Different weights are also set to balance the different objectives, especially for small areas such as the lips and eyes. The network is trained with the Adam optimization algorithm for a total of 1000 epochs, with a batch size of 1 and a fixed learning rate of 0.0002. A skip connection is added between the encoder and decoder to carry more identity detail, and the latent variables are concatenated along the channel dimension at the bottleneck (Gu et al., 2019). This helps improve image quality and convey the makeup effect.
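The augmentation and training settings above can be collected into a small sketch. The numeric values come from the text; the names `TrainConfig`, `sample_augmentation`, and `apply_augmentation` are illustrative, and the image is assumed to be a nested list that has already been resized to 286×286.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

Image = List[List[int]]


@dataclass(frozen=True)
class TrainConfig:
    load_size: int = 286          # side length after scaling
    crop_size: int = 256          # side length after random crop
    flip_prob: float = 0.5        # probability of a horizontal flip
    epochs: int = 1000
    batch_size: int = 1
    learning_rate: float = 0.0002
    optimizer: str = "adam"


def sample_augmentation(cfg: TrainConfig, rng: random.Random) -> Tuple[int, int, bool]:
    """Draw a random crop offset and a flip decision."""
    max_offset = cfg.load_size - cfg.crop_size
    left = rng.randint(0, max_offset)   # randint is inclusive on both ends
    top = rng.randint(0, max_offset)
    flip = rng.random() < cfg.flip_prob
    return left, top, flip


def apply_augmentation(img: Image, cfg: TrainConfig,
                       left: int, top: int, flip: bool) -> Image:
    """Crop a crop_size square at (left, top) and optionally mirror it."""
    rows = img[top:top + cfg.crop_size]
    cropped = [row[left:left + cfg.crop_size] for row in rows]
    if flip:
        cropped = [row[::-1] for row in cropped]
    return cropped
```

Separating the random draw from its application makes it possible to apply the same crop and flip to an image and to its face parsing mask, which matters when a loss is computed per semantic region.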

2. Local makeup loss assessment

In this study, the same original makeup reference image is entered into each makeup encoder, and the proposed local makeup loss function makes the various encoders learn different information and forces that information to be separated. To evaluate the effect of the local makeup loss, an experiment was conducted in which this loss function was removed. The experiment shows that the local makeup loss function forces different encoders to learn different makeup information and promotes the separation of local makeup information. In other words, the loss is essential for conveying the makeup effect: when it is removed, the local makeup effect is lost. The results therefore show that it is important to target precisely the specific areas that are the makeup points for each age group.

3. BeautyGAN model evaluation

In this study, the evaluation was performed using BeautyGAN, as shown in <Table 2>. A histogram matching loss was used to produce visually realistic transfer results. As a result, the lipstick, eye shadow, and foundation show a strong match to the makeup style of the reference image. This indicates that the makeup transfer method of this study gives better results than other methods and reproduces a more accurate and realistic makeup effect. In addition, in the performance evaluation, the existing image scored 51.26%, which was judged to approach the 38.89% of the BeautyGAN image. This means that the results are realistic.
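The idea behind a histogram matching loss can be illustrated with a short sketch. It assumes 8-bit intensities from a single color channel and a single facial region (for example, the lip pixels), given as flat lists. The function names are illustrative, and the mean squared error used here is an assumption rather than the exact distance used in the study.

```python
from typing import List


def cdf(values: List[int], levels: int = 256) -> List[float]:
    """Cumulative distribution of integer intensities in [0, levels)."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    out, running = [], 0
    for h in hist:
        running += h
        out.append(running / len(values))
    return out


def histogram_match(source: List[int], reference: List[int],
                    levels: int = 256) -> List[int]:
    """Remap source intensities so their distribution follows the reference."""
    src_cdf = cdf(source, levels)
    ref_cdf = cdf(reference, levels)
    mapping, r = [], 0
    for s in range(levels):
        # Smallest reference level whose CDF reaches the source CDF at s.
        while r < levels - 1 and ref_cdf[r] < src_cdf[s]:
            r += 1
        mapping.append(r)
    return [mapping[v] for v in source]


def histogram_loss(generated: List[int], reference: List[int]) -> float:
    """Mean squared error between a region and its histogram-matched version."""
    target = histogram_match(generated, reference)
    return sum((g - t) ** 2 for g, t in zip(generated, target)) / len(generated)
```

The matched version keeps the spatial layout of the generated region while taking on the color distribution of the reference, so the loss pushes the generator toward the reference's makeup colors without requiring the two faces to be aligned pixel by pixel.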

4. Makeup removal results

In general, a face wearing cosmetics does not reveal its natural appearance, so restoring the non-makeup face from a makeup image can yield various results. In this study, makeup removal was therefore treated as an unsupervised image conversion problem. By feeding makeup components from non-makeup images to the decoder, we were able to obtain a number of realistic makeup removal results. In other words, removing makeup from a makeup image is not simply a matter of erasing the makeup; approaching it as a conditional image conversion problem gives more realistic and more diverse results.

5. Interpolated makeup transfer results

Interpolation is the process of creating intermediate stages between makeup styles: two or more makeup styles are mixed to produce makeup effects with several stages in between. In this study, not only can local makeup be transferred, but the degree of the local makeup style can also be flexibly controlled. The formula for local makeup styles is as follows:

αL, αS, and αF are weights in the range [0, 1] that control the degree of makeup style. First, αL, αS, and αF are set to the same value to produce an overall interpolated result. Next, two of the weights are fixed at 0 or 1 and the third is gradually changed. This confirms that, whichever kind of interpolated transfer is performed, the encoder can produce smooth, realistic results.
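One way to realize this kind of weighting is linear blending of latent makeup codes, sketched below. This is an assumption for illustration, not the study's formula. The region keys "L", "S", and "F" mirror the subscripts of the weights, the function names are hypothetical, and each code is a plain list of floats.

```python
from typing import Dict, List

Code = List[float]
REGIONS = ("L", "S", "F")   # keys mirroring the subscripts of the weights


def lerp_code(source_code: Code, reference_code: Code, alpha: float) -> Code:
    """Blend two latent codes; alpha=0 keeps the source, alpha=1 the reference."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return [(1.0 - alpha) * s + alpha * r
            for s, r in zip(source_code, reference_code)]


def interpolate_makeup(source_codes: Dict[str, Code],
                       reference_codes: Dict[str, Code],
                       alphas: Dict[str, float]) -> Dict[str, Code]:
    """Apply an independent interpolation weight to each local makeup code."""
    return {
        region: lerp_code(source_codes[region],
                          reference_codes[region],
                          alphas[region])
        for region in REGIONS
    }
```

Setting all three weights to the same value gives an overall interpolation, while fixing two weights at 0 or 1 and sweeping the third changes only one local style, matching the two experiments described above. The blended codes would then be passed to the decoder together with the identity information.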

As shown in <Table 3>, the local makeup style was changed effectively while the rest of the face image was left unaltered. This shows that the local makeup style can be transferred accurately and that the degree of makeup can be adjusted flexibly. In other words, the intensity of the makeup can be tuned gradually to obtain a realistic makeup effect in the desired style.

6. Mixed makeup transfer results

In this study, the goal is to maintain the identity of the face in the non-makeup image while mixing makeup styles. This places higher demands on the degree of information separation and on the effectiveness of the encoder. The formula for this is as follows:

Lp, Sq, and Fr denote latent representations of the face. The technique focuses on maintaining the true identity of the face while accurately blending individual makeup styles. This is a challenging task that requires a high degree of technical sophistication.
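A minimal sketch of such blending follows, under the assumption that each local makeup code can be taken from a different reference image. Reading the subscripts p, q, and r as reference image indices is an interpretation rather than something the text states, and `mix_makeup_codes` is a hypothetical helper.

```python
from typing import Dict, List

Code = List[float]


def mix_makeup_codes(references: List[Dict[str, Code]],
                     p: int, q: int, r: int) -> Dict[str, Code]:
    """Take code "L" from reference p, "S" from reference q, "F" from reference r."""
    return {
        "L": references[p]["L"],
        "S": references[q]["S"],
        "F": references[r]["F"],
    }
```

The mixed codes would then be decoded together with the identity information of the non-makeup image, so the quality of the result depends on how cleanly the encoders separate the local styles from identity.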

The results of the analysis show that separating the facial image information makes it possible not only to control the degree of local makeup style flexibly, but also to transfer local makeup styles from other images to the result. For makeup removal, we were able to combine makeup transfer and removal in one unified framework, which produced multiple makeup removal results. The above results were also compared with other studies to verify their effectiveness, and the comparison was satisfactory. In further research, we intend to subdivide facial images into individual identities and different makeup styles, so that local makeup styles can be controlled and transferred more precisely and flexibly. This can provide an integrated framework that deals effectively with various makeup-related problems, including makeup removal. The ability to generalize to different lighting conditions and complex scenarios also remains to be tested.

Ⅴ. Conclusion

The purpose of this study was to optimize the preferred makeup image for each age group using BeautyGAN. BeautyGAN consists of an encoder and a decoder that generate images, and the performance of the generator is optimized by combining it with a makeup loss. In this way, it was possible to obtain an accurate makeup image that resembles the original photo without changing the shape of the face. The academic and practical implications of this study are as follows.

The academic implications are as follows. First, the study advances image analysis and processing. It offers a new approach that analyzes individual makeup elements and individual identity separately. This method could provide a more detailed analysis of the characteristics of complex images and open new directions for research, especially in relation to facial recognition. By treating the various components of an image individually, the approach contributes to the development of image processing technology and can play an important role in personalized image recognition and analysis. Second, the study enables a high degree of sophistication in the analysis and transfer of makeup styles. Deep learning is a powerful tool for recognizing and learning complex patterns in images, and vision systems convert these patterns into concrete image elements; both are essential for accurately identifying specific styles or features of makeup and transferring them to other images. The approach has great potential in personalized image processing and analysis. It can provide customized makeup style suggestions that reflect users' specific needs and preferences, or makeup effects tailored to the characteristics of an individual's face, and it is expected to be applied in fields such as the beauty industry, digital advertising, and social media content production. Third, the study demonstrates the range of applications of artificial intelligence technology. By applying artificial intelligence to makeup and beauty, it shows how artificial intelligence can go beyond simple data analysis and prediction and be used in creative and artistic fields. In other words, it is expected to open up new application areas for artificial intelligence and the possibility of using it to solve a wider range of problems.

The practical implications are as follows. First, the study has applications in the makeup industry, where it may bring about significant changes. For example, personalized makeup suggestions can recommend makeup styles that match consumers' facial features, which can be done by providing a platform where consumers upload photos of their faces and try on different makeup styles virtually. Virtual makeup simulations also give consumers the opportunity to try different colors and styles before actually using makeup products. This is especially useful in online shopping environments and helps consumers make more informed decisions before purchasing a product. In addition, these technologies provide new tools for makeup artists and beauty professionals, who can use them to suggest makeup styles that match their customers' characteristics more accurately and quickly, improving the quality of personalized beauty services.

Second, the study contributes to digital content creation. Especially in the film, advertising, and game industries, using the technology to design or modify a character's makeup helps make the character's appearance more realistic and detailed, allowing directors and designers to make the character's visuals richer and more colorful. In addition, when combined with technologies such as virtual reality and augmented reality, it can provide a more immersive experience, allowing creators to convey a character's personality and story more effectively and to create visually appealing content. Third, the study improves the consumer experience by providing an environment in which consumers can easily try different makeup styles. The technology will be particularly important in online shopping and virtual reality environments. Consumers will be able to try on different makeup styles from the comfort of their own homes, which will inform their purchasing decisions. In addition, in a virtual reality environment, users can experience a variety of styles as if they were actually wearing makeup, which is expected to increase consumer satisfaction and strengthen brand loyalty.

Deep learning-based makeup image recommendation system

(Source: https://www.aitimes.kr/ https://www.perfectcorp.com/ https://www.vogue.co.kr/)